1. An F# wrapper for Weka. The minimal wrapper in F# for Weka.

2. More features (this post). This post describes improvements over the minimal wrapper, e.g. more Dataset processing functions, some plotting functionality, etc.

3. Tutorial/Usage examples. This post is for end users who may not be interested in the implementation details but want to know how to use this wrapper to perform data mining tasks in .NET.

The following features have been added to WekaSharp.

Parameters for data mining algorithms.

F# features: named parameters and pattern matching.

Data mining algorithms usually have parameters that must be set before they are applied to the data. For example, K-means clustering needs the user to provide the number of clusters, and a Support Vector Machine classifier needs the user to choose the kernel type.

Different algorithms also have different sets of parameters. In Weka, algorithm parameters are encoded as a string, in the style of command-line options. However, a first-time user would not remember what "-R 100" means for a specific algorithm, so we'd like to have a parameter maker for each algorithm. F# supports this task well through named parameters.
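To make the encoding concrete, the following sketch splits such an option string into flag/value pairs. `splitOptionPairs` is a hypothetical helper, not part of Weka or WekaSharp, and it assumes that every flag takes exactly one value and that no value contains a space.

```fsharp
// Hypothetical helper: pairs each flag in a Weka-style option string with its value.
// Assumes every flag takes exactly one value and that no value contains a space.
let splitOptionPairs (options: string) =
    options.Split([| ' ' |], System.StringSplitOptions.RemoveEmptyEntries)
    |> Array.chunkBySize 2
    |> Array.map (fun pair -> pair.[0], pair.[1])
    |> Array.toList

// In "-M -1" the value "-1" starts with a dash but is still read as a value, by position
printfn "%A" (splitOptionPairs "-R 100 -M -1")
```

The string alone says nothing about what "-R" or "-M" mean, which is exactly the gap a per-algorithm parameter maker fills.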

Let’s see an example. The following code is for making parameters for Logistic Regression:

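```fsharp
type LogReg() =
    // Defaults for Weka's Logistic: ridge (-R) 1.0E-8, max iterations (-M) -1 (until convergence)
    static member DefaultPara = "-R 1.0E-8 -M -1"

    // Both parameters are optional and can be passed by name
    static member MakePara(?maxIter: int, ?ridge: float) =
        let maxIterStr =
            let m =
                match maxIter with
                | Some v -> v
                | None -> -1
            "-M " + m.ToString()
        let ridgeStr =
            let r =
                match ridge with
                | Some v -> v
                | None -> 1.0E-8
            "-R " + r.ToString()
        maxIterStr + " " + ridgeStr
```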

And this is an example of its usage:

```fsharp
let logRegPara = LogReg.MakePara(ridge = 1.0)
```

Besides naming the parameters, you can supply only some of them; the omitted ones take their default values.

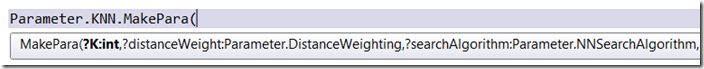
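The same parameter maker can be written more compactly with `defaultArg`, which returns the supplied value or a fallback. The sketch below (the type name `LogRegSketch` is hypothetical) also formats numbers with the invariant culture, so that a machine whose locale uses a comma as the decimal separator still produces "0.5" rather than "0,5". It assumes 1.0E-8 as the ridge default, which is Weka's documented default for Logistic.

```fsharp
open System.Globalization

// Hypothetical variant of the Logistic Regression parameter maker
type LogRegSketch() =
    static member MakePara(?maxIter: int, ?ridge: float) =
        // defaultArg picks the supplied value, or the default when the argument is omitted
        let m = defaultArg maxIter (-1)
        let r = defaultArg ridge 1.0E-8
        // Invariant culture keeps "." as the decimal separator on every locale
        sprintf "-M %s -R %s"
            (m.ToString(CultureInfo.InvariantCulture))
            (r.ToString(CultureInfo.InvariantCulture))

// Supplying only some parameters, by name
let p1 = LogRegSketch.MakePara(ridge = 0.5)
let p2 = LogRegSketch.MakePara(maxIter = 100, ridge = 2.0)
printfn "%s\n%s" p1 p2
```

The behavior matches the match-based version; `defaultArg` simply keeps each default next to the parameter it belongs to.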

Another F# feature highlighted in the Parameter module is pattern matching. Some parameters can be quite complicated, e.g. the nearest-neighbor search algorithm used in KNN: there are 4 search algorithms, each of which supports only some of the distance metrics. Discriminated unions and pattern matching make generating these parameter strings easy:

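```fsharp
// Nearest-neighbour search algorithms available to KNN
type NNSearchAlgorithm =
    | LinearSearch
    | BallTree
    | CoverTree
    | KDTree

type DistanceFunction =
    | Euclidean
    | Chebyshev
    | EditDistance
    | Manhattan
    // Maps an optional metric to its Weka class name and options (Euclidean by default)
    static member GetString(distanceFunction: DistanceFunction option) =
        let d =
            match distanceFunction with
            | Some v -> v
            | None -> DistanceFunction.Euclidean
        match d with
        | DistanceFunction.Euclidean -> "weka.core.EuclideanDistance -R first-last"
        | DistanceFunction.Chebyshev -> "weka.core.ChebyshevDistance -R first-last"
        | DistanceFunction.EditDistance -> "weka.core.EditDistance -R first-last"
        | DistanceFunction.Manhattan -> "weka.core.ManhattanDistance -R first-last"

// Hypothetical wrapper name: in WekaSharp this logic runs inside the KNN parameter maker,
// which receives searchAlgorithm and distanceFunction as optional arguments
let makeSearchPara (searchAlgorithm: NNSearchAlgorithm option) (distanceFunction: DistanceFunction option) =
    let distanceFunctionStr = DistanceFunction.GetString distanceFunction
    let searchAlgorithmStr =
        let s =
            match searchAlgorithm with
            | Some v -> v
            | None -> NNSearchAlgorithm.LinearSearch
        // The quoted distance function is passed to the search algorithm via -A
        match s with
        | NNSearchAlgorithm.LinearSearch ->
            "weka.core.neighboursearch.LinearNNSearch" + " -A " + "\"" + distanceFunctionStr + "\""
        | NNSearchAlgorithm.BallTree ->
            "weka.core.neighboursearch.BallTree" + " -A " + "\"" + distanceFunctionStr + "\""
        | NNSearchAlgorithm.CoverTree ->
            "weka.core.neighboursearch.CoverTree" + " -A " + "\"" + distanceFunctionStr + "\""
        | NNSearchAlgorithm.KDTree ->
            "weka.core.neighboursearch.KDTree" + " -A " + "\"" + distanceFunctionStr + "\""
    searchAlgorithmStr
```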

If you are interested, you can browse the Java code for this task (in Weka's GUI component) and see how much more succinct the F# version is.
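The F# code above still repeats the `" -A "` concatenation in every branch. One possible refactoring, sketched here under the assumption that the Weka class names stay exactly as in that code, maps each union case to its class name once and builds the option string in a single place. `distanceClass`, `searchClass`, and `searchOption` are hypothetical names.

```fsharp
type NNSearchAlgorithm =
    | LinearSearch
    | BallTree
    | CoverTree
    | KDTree

type DistanceFunction =
    | Euclidean
    | Chebyshev
    | EditDistance
    | Manhattan

// Each case maps to its Weka class name exactly once
let distanceClass = function
    | Euclidean -> "weka.core.EuclideanDistance"
    | Chebyshev -> "weka.core.ChebyshevDistance"
    | EditDistance -> "weka.core.EditDistance"
    | Manhattan -> "weka.core.ManhattanDistance"

let searchClass = function
    | LinearSearch -> "weka.core.neighboursearch.LinearNNSearch"
    | BallTree -> "weka.core.neighboursearch.BallTree"
    | CoverTree -> "weka.core.neighboursearch.CoverTree"
    | KDTree -> "weka.core.neighboursearch.KDTree"

// Missing arguments fall back to linear search with Euclidean distance, as in the original
let searchOption (searchAlgorithm: NNSearchAlgorithm option) (distanceFunction: DistanceFunction option) =
    let s = defaultArg searchAlgorithm LinearSearch
    let d = defaultArg distanceFunction Euclidean
    sprintf "%s -A \"%s -R first-last\"" (searchClass s) (distanceClass d)

printfn "%s" (searchOption (Some KDTree) None)
```

Because the mappings are matches over union cases, the compiler warns about an incomplete match (FS0025) if a new case is added without updating them.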

More Dataset manipulation functions

Weka's dataset class (Instances) is very hard to use. Normally, a dataset is simply a table, with each row representing an instance and each column representing a feature, but Weka's dataset class is too general to be manipulated easily. Weka provides various filters to manipulate datasets; however, their names are hard to remember, and applying a filter takes several lines of code.

First, I wrote a function that transforms a 2-dimensional array into a Weka dataset, so that in-memory data can easily be turned into a dataset:

```fsharp
let from2DArray (d:float[,]) (intAsNominal:bool)
```

With intAsNominal = true, every column whose values are all integers is treated as a nominal column; with intAsNominal = false, all columns are treated as real-valued.
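How from2DArray decides which columns are integer-valued is not shown here. A minimal sketch of that rule on a plain float[,] might look like this, assuming "integer" means a value with no fractional part. `isIntegerColumn`, `ColumnKind`, and `columnKinds` are hypothetical names, and building the actual Weka Instances object is left out.

```fsharp
// Hypothetical helper: true when every value in column `col` has no fractional part
let isIntegerColumn (d: float[,]) (col: int) =
    seq { 0 .. Array2D.length1 d - 1 }
    |> Seq.forall (fun row -> d.[row, col] = floor d.[row, col])

type ColumnKind =
    | Nominal
    | Real

// Hypothetical helper: decides each column's kind following the intAsNominal rule
let columnKinds (d: float[,]) (intAsNominal: bool) =
    List.init (Array2D.length2 d) (fun col ->
        if intAsNominal && isIntegerColumn d col then Nominal else Real)

// Rows are instances, columns are features
let data = array2D [ [ 1.0; 2.5 ]; [ 3.0; 4.0 ]; [ 2.0; 0.1 ] ]
printfn "%A" (columnKinds data true)
```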

I also wrapped common filters into single functions, so applying a filter takes only one line of code. These functions are all in the Dataset module.

Parallel processing

The PSeq module (in the F# PowerPack) makes writing compute-bound parallel programs effortless. In data mining, parameter tuning and trying different models on different datasets involve many independent tasks, so running them in parallel on a multi-core machine can be much faster.

The following code shows two functions that run a batch of classification tasks:

```fsharp
let evalBulkClassify (tasks: ClaEvalTask seq) =
    tasks
    |> Seq.map evalClassify
    |> Seq.toList

// PSeq comes from the F# PowerPack's parallel sequence library
let evalBulkClassifyParallel (tasks: ClaEvalTask seq) =
    tasks
    |> PSeq.map evalClassify
    |> PSeq.toList
```

The latter is the parallel version; it simply replaces Seq in the sequential version with PSeq.
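The same pattern works without the PowerPack. The following sketch swaps in `Array.Parallel.map` from FSharp.Core in place of PSeq. Since ClaEvalTask and evalClassify need Weka, they are replaced by hypothetical stand-ins (`FakeTask`, `fakeEval`) that do some CPU-bound work, so the example runs on its own.

```fsharp
// Hypothetical stand-ins for ClaEvalTask and evalClassify
type FakeTask = { Name: string; Size: int }

// A CPU-bound computation per task, standing in for training and evaluating a classifier
let fakeEval (task: FakeTask) =
    task.Name, Seq.sum (seq { 1L .. int64 task.Size })

let evalBulkSequential (tasks: FakeTask seq) =
    tasks |> Seq.map fakeEval |> Seq.toList

// Array.Parallel.map runs fakeEval on the thread pool; result i corresponds to input i
let evalBulkParallel (tasks: FakeTask seq) =
    tasks |> Seq.toArray |> Array.Parallel.map fakeEval |> Array.toList

let tasks = [ for i in 1 .. 8 -> { Name = sprintf "task%d" i; Size = i * 100000 } ]
printfn "%A" (evalBulkParallel tasks)
```

Because `Array.Parallel.map` keeps results in input order, both versions return identical lists. Parallelism only pays off when each task is expensive, as training a classifier usually is.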